Writing query results

This document describes how to write query results to temporary or permanent tables.

Temporary and permanent tables

BigQuery saves all query results to a table, which can be either permanent or temporary.

-

BigQuery uses temporary tables to cache query results that aren't written to a permanent table. The tables are created in a special dataset and named randomly. You can also create temporary tables for your own use within multi-statement queries and sessions . You aren't charged for temporary cached query result tables . You are charged for temporary tables that aren't cached query results.

-

After a query finishes, the temporary table exists for up to 24 hours. To view table structure and data, do the following:

-

Go to the BigQuerypage.

-

In the left pane, click Explorer:

If you don't see the left pane, click Expand left paneto open the pane.

-

In the Explorerpane, click Job history.

-

Click Personal history.

-

Choose the query that created the temporary table. Then, in the Destination tablerow, click Temporary table.

-

-

Access to the temporary table data is restricted to the user or service account that created the query job.

-

You cannot share temporary tables, and they are not visible using any of the standard list or other table manipulation methods. If you need to share your query results, write the results to a permanent table, download them, or share them though Google Sheets or Google Drive.

-

Temporary tables are created in the same region as the table or tables being queried.

-

A permanent table can be a new or existing table in any dataset to which you have access. If you write query results to a new table, you are charged for storing the data. When you write query results to a permanent table, the tables you're querying must be in the same location as the dataset that contains the destination table.

-

You can't save query results in a temporary table when the domain-restricted organization policy is enabled. As a workaround, temporarily disable the domain-restricted organization policy, run the query, and then again enable the policy. Alternatively, you can save query results in a destination table.

Required permissions

At a minimum, to write query results to a table, you must be granted the following permissions:

-

bigquery.tables.createpermissions to create a new table -

bigquery.tables.updateDatato write data to a new table, overwrite a table, or append data to a table -

bigquery.jobs.createto run a query job

Additional permissions such as bigquery.tables.getData

may be required to

access the data you're querying.

The following predefined IAM roles include both bigquery.tables.create

and bigquery.tables.updateData

permissions:

-

bigquery.dataEditor -

bigquery.dataOwner -

bigquery.admin

The following predefined IAM roles include bigquery.jobs.create

permissions:

-

bigquery.user -

bigquery.jobUser -

bigquery.admin

In addition, if a user has bigquery.datasets.create

permissions, when that

user creates a dataset, they are granted bigquery.dataOwner

access to it. bigquery.dataOwner

access gives the user the ability to create and

update tables in the dataset.

For more information on IAM roles and permissions in BigQuery, see Predefined roles and permissions .

Write query results to a permanent table

When you write query results to a permanent table, you can create a new table, append the results to an existing table, or overwrite an existing table.

Writing query results

Use the following procedure to write your query results to a permanent table. To help control costs, you can preview data before running the query.

Console

-

Open the BigQuery page in the Google Cloud console.

-

In the left pane, click Explorer:

If you don't see the left pane, click Expand left paneto open the pane.

-

In the Explorerpane, expand your project, click Datasets, and then select a dataset.

-

In the query editor, enter a valid SQL query.

-

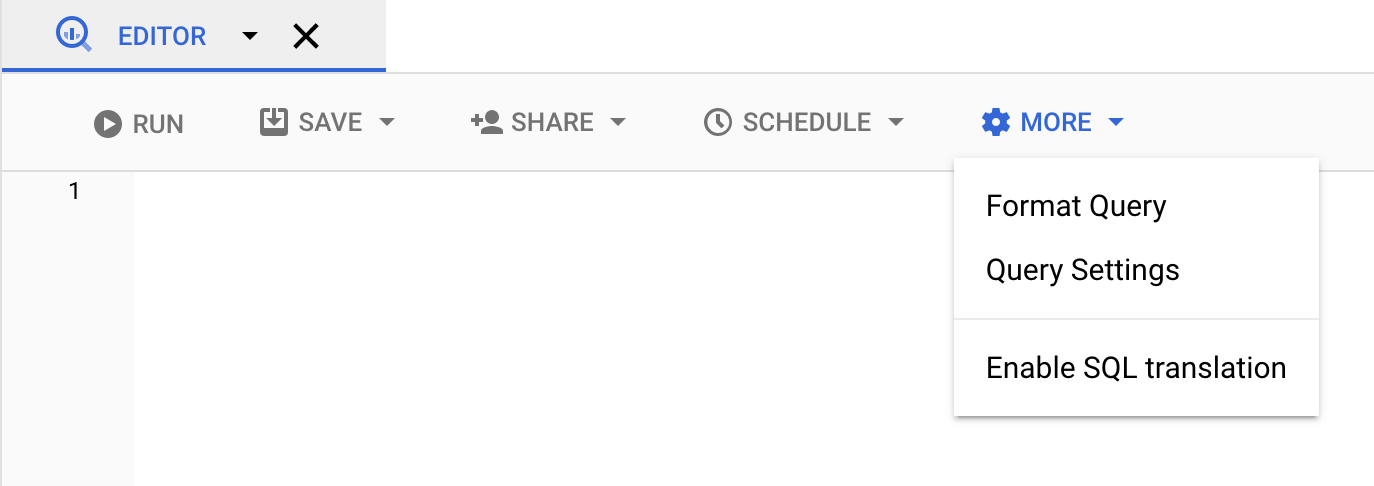

Click Moreand then select Query settings.

-

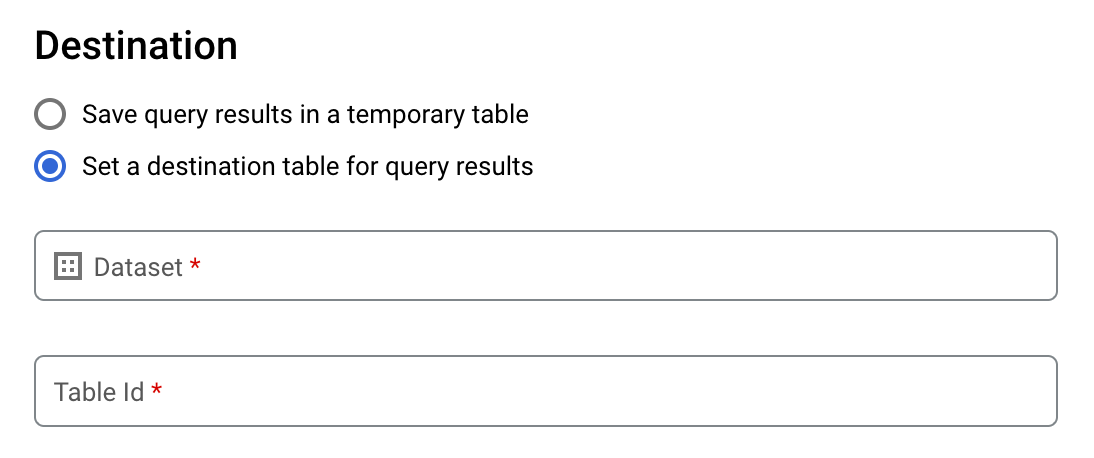

Select the Set a destination table for query resultsoption.

-

In the Destinationsection, select the Datasetin which you want to create the table, and then choose a Table Id.

-

In the Destination table write preferencesection, choose one of the following:

- Write if empty— Writes the query results to the table only if the table is empty.

- Append to table— Appends the query results to an existing table.

- Overwrite table— Overwrites an existing table with the same name using the query results.

-

Optional: For Data location, choose your location .

-

To update the query settings, click Save.

-

Click Run. This creates a query job that writes the query results to the table you specified.

Alternatively, if you forget to specify a destination table before running your query, you can copy the cached results table to a permanent table by clicking the Save Results button above the editor.

SQL

The following example uses the CREATE TABLE

statement

to create the trips

table from data in the public bikeshare_trips

table:

-

In the Google Cloud console, go to the BigQuerypage.

-

In the query editor, enter the following statement:

CREATE TABLE mydataset . trips AS ( SELECT bike_id , start_time , duration_minutes FROM bigquery - public - data . austin_bikeshare . bikeshare_trips );

-

Click Run.

For more information about how to run queries, see Run an interactive query .

For more information, see Creating a new table from an existing table .

bq

-

In the Google Cloud console, activate Cloud Shell.

At the bottom of the Google Cloud console, a Cloud Shell session starts and displays a command-line prompt. Cloud Shell is a shell environment with the Google Cloud CLI already installed and with values already set for your current project. It can take a few seconds for the session to initialize.

-

Enter the

bq querycommand and specify the--destination_tableflag to create a permanent table based on the query results. Specify theuse_legacy_sql=falseflag to use GoogleSQL syntax. To write the query results to a table that is not in your default project, add the project ID to the dataset name in the following format:project_id : dataset.Optional: Supply the

--locationflag and set the value to your location .To control the write disposition for an existing destination table, specify one of the following optional flags:

-

--append_table: If the destination table exists, the query results are appended to it. -

--replace: If the destination table exists, it is overwritten with the query results.bq --location = location query \ --destination_table project_id : dataset . table \ --use_legacy_sql = false ' query '

Replace the following:

-

locationis the name of the location used to process the query. The--locationflag is optional. For example, if you are using BigQuery in the Tokyo region, you can set the flag's value toasia-northeast1. You can set a default value for the location by using the.bigqueryrcfile . -

project_idis your project ID. -

datasetis the name of the dataset that contains the table to which you are writing the query results. -

tableis the name of the table to which you're writing the query results. -

queryis a query in GoogleSQL syntax.If no write disposition flag is specified, the default behavior is to write the results to the table only if it is empty. If the table exists and it is not empty, the following error is returned:

BigQuery error in query operation: Error processing job project_id :bqjob_123abc456789_00000e1234f_1: Already Exists: Table project_id:dataset.table.Examples:

Enter the following command to write query results to a destination table named

mytableinmydataset. The dataset is in your default project. Since no write disposition flag is specified in the command, the table must be new or empty. Otherwise, anAlready existserror is returned. The query retrieves data from the USA Name Data public dataset .bq query \ --destination_table mydataset.mytable \ --use_legacy_sql = false \ 'SELECT name, number FROM `bigquery-public-data`.usa_names.usa_1910_current WHERE gender = "M" ORDER BY number DESC'

Enter the following command to use query results to overwrite a destination table named

mytableinmydataset. The dataset is in your default project. The command uses the--replaceflag to overwrite the destination table.bq query \ --destination_table mydataset.mytable \ --replace \ --use_legacy_sql = false \ 'SELECT name, number FROM `bigquery-public-data`.usa_names.usa_1910_current WHERE gender = "M" ORDER BY number DESC'

Enter the following command to append query results to a destination table named

mytableinmydataset. The dataset is inmy-other-project, not your default project. The command uses the--append_tableflag to append the query results to the destination table.bq query \ --append_table \ --use_legacy_sql = false \ --destination_table my-other-project:mydataset.mytable \ 'SELECT name, number FROM `bigquery-public-data`.usa_names.usa_1910_current WHERE gender = "M" ORDER BY number DESC'

The output for each of these examples looks like the following. For readability, some output is truncated.

Waiting on bqjob_r123abc456_000001234567_1 ... (2s) Current status: DONE +---------+--------+ | name | number | +---------+--------+ | Robert | 10021 | | John | 9636 | | Robert | 9297 | | ... | +---------+--------+

-

API

To save query results to a permanent table, call the jobs.insert

method,

configure a query

job, and include a value for the destinationTable

property. To control the write disposition for an existing destination

table, configure the writeDisposition

property.

To control the processing location for the query job, specify the location

property in the jobReference

section of the job resource

.

Go

Before trying this sample, follow the Go setup instructions in the BigQuery quickstart using client libraries . For more information, see the BigQuery Go API reference documentation .

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries .

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries . For more information, see the BigQuery Java API reference documentation .

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries .

To save query results to a permanent table, set the destination table to the desired TableId in a QueryJobConfiguration .

Node.js

Before trying this sample, follow the Node.js setup instructions in the BigQuery quickstart using client libraries . For more information, see the BigQuery Node.js API reference documentation .

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries .

Python

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries . For more information, see the BigQuery Python API reference documentation .

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries .

To save query results to a permanent table, create a QueryJobConfig and set the destination to the desired TableReference . Pass the job configuration to the query method .