AI-generated Key Takeaways

- Code snippets for simple responses can be imported, deployed, and explored in Dialogflow.

- Simple responses appear as chat bubbles and can use text-to-speech (TTS) or SSML for audio.

- Simple responses are supported on devices with audio or screen output capabilities. Each chat bubble has a 640-character limit, and fewer than 300 characters is recommended.

- Chat bubble content must be a phonetic subset or a complete transcript of the TTS/SSML output, and a turn can contain at most two chat bubbles.

- SSML can add polish and improve the user experience with features such as sounds and pauses.

Try the snippets: Import, deploy, and explore code snippets in context using a new Dialogflow agent.

Explore in Dialogflow

Click Continue to import our Responses sample in Dialogflow. Then, follow the steps below to deploy and test the sample:

- Enter an agent name and create a new Dialogflow agent for the sample.

- After the agent is done importing, click Go to agent.

- From the main navigation menu, go to Fulfillment.

- Enable the Inline Editor, then click Deploy. The editor contains the sample code.

- From the main navigation menu, go to Integrations, then click Google Assistant.

- In the modal window that appears, enable Auto-preview changes and click Test to open the Actions simulator.

- In the simulator, enter `Talk to my test app` to test the sample!

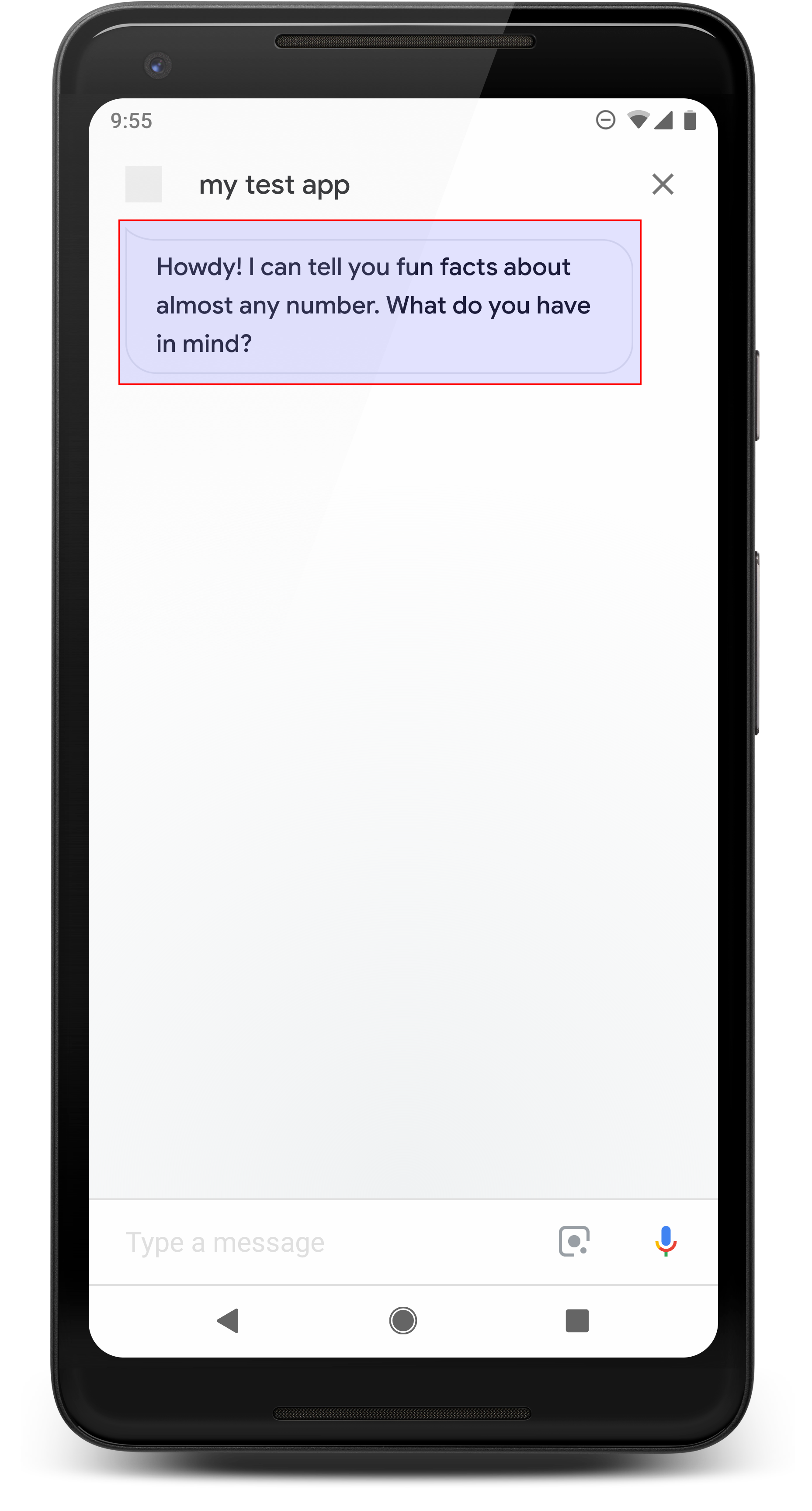

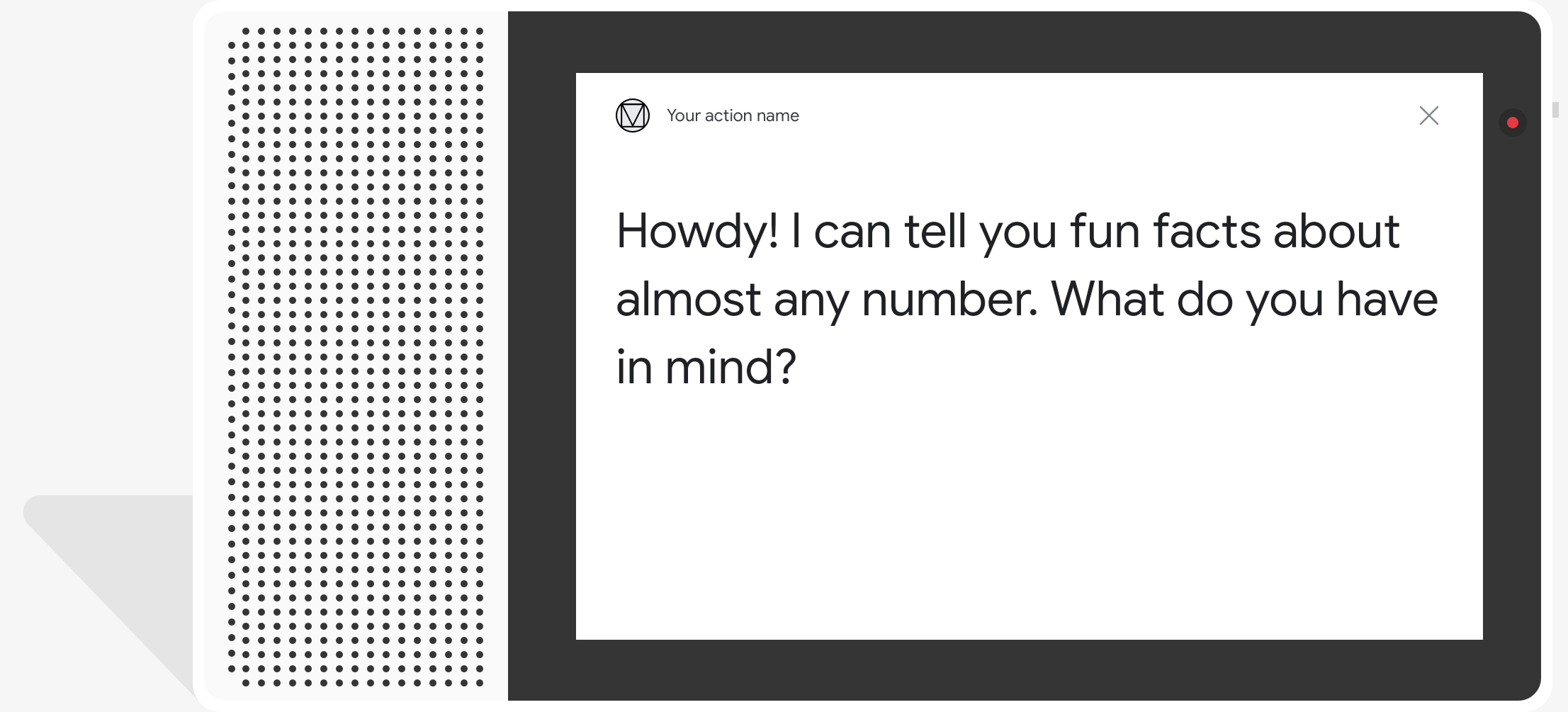

Visually, simple responses take the form of a chat bubble; for sound, they use text-to-speech (TTS) or Speech Synthesis Markup Language (SSML).

By default, the TTS text is also used as the chat bubble content. If that text works well visually, you don't need to specify separate display text for the chat bubble.
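As a rough illustration of this default, the sketch below resolves what a chat bubble would show for a simple response shaped like the JSON samples on this page (`textToSpeech` plus an optional `displayText`). The helper name `chatBubbleText` is hypothetical, and the sketch assumes plain-text TTS; how the Assistant renders SSML markup in a bubble is not modeled here.

```javascript
/**
 * Hypothetical helper: returns the text a chat bubble would show for a
 * simple response shaped like the JSON samples on this page:
 * { textToSpeech: string, displayText?: string }.
 * Mirrors the documented default: without displayText, the TTS text is used.
 */
function chatBubbleText({ textToSpeech, displayText }) {
  return displayText !== undefined ? displayText : textToSpeech;
}

// No display text: the bubble shows the TTS text.
console.log(chatBubbleText({ textToSpeech: 'Hello there.' }));

// Separate display text: the bubble shows a shorter version.
console.log(chatBubbleText({
  textToSpeech: "Here's an example of a simple response.",
  displayText: "Here's a simple response.",
}));
```

Setting `displayText` is only worthwhile when the on-screen version should differ from what is spoken, for example a shorter phrasing.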

You can also review our conversation design guidelines to learn how to incorporate these visual elements in your Action.

Properties

Simple responses have the following requirements and optional properties that you can configure:

- Supported on surfaces with the `actions.capability.AUDIO_OUTPUT` or `actions.capability.SCREEN_OUTPUT` capabilities.

- 640-character limit per chat bubble. Strings longer than the limit are truncated at the last word break (or whitespace) before the 640th character.

- Chat bubble content must be a phonetic subset or a complete transcript of the TTS/SSML output. This helps users follow what you are saying and improves comprehension in a variety of conditions.

- At most two chat bubbles per turn.

- The chat head (logo) that you submit to Google must be 192x192 pixels and cannot be animated.
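If you want to catch violations before sending a response, a pre-flight check along these lines may help. This is a minimal sketch, not part of any Actions on Google library: the helper names are hypothetical, the truncation function only approximates the documented rule, and JavaScript string length counts UTF-16 code units, which may differ from how the platform counts characters. The 300-character threshold is the recommendation from the key takeaways, not a hard limit.

```javascript
// Limits from the requirements above. 300 is a recommendation only.
const MAX_BUBBLE_CHARS = 640;
const RECOMMENDED_BUBBLE_CHARS = 300;
const MAX_BUBBLES_PER_TURN = 2;
const SUPPORTED_CAPABILITIES = [
  'actions.capability.AUDIO_OUTPUT',
  'actions.capability.SCREEN_OUTPUT',
];

// True if the surface reports at least one capability that supports simple responses.
function supportsSimpleResponses(capabilities) {
  return capabilities.some((c) => SUPPORTED_CAPABILITIES.includes(c));
}

// Approximation of the documented truncation: cut at the last whitespace at or
// before the limit. If the text has no whitespace there, hard-cut at the limit.
function truncateBubble(text, limit = MAX_BUBBLE_CHARS) {
  if (text.length <= limit) return text;
  for (let i = limit; i > 0; i--) {
    if (/\s/.test(text[i])) return text.slice(0, i).trimEnd();
  }
  return text.slice(0, limit);
}

// Returns a list of problems with one turn's chat bubbles (empty if none).
function checkBubbles(bubbles) {
  const problems = [];
  if (bubbles.length > MAX_BUBBLES_PER_TURN) {
    problems.push(`too many bubbles: ${bubbles.length} > ${MAX_BUBBLES_PER_TURN}`);
  }
  bubbles.forEach((text, i) => {
    if (text.length > MAX_BUBBLE_CHARS) {
      problems.push(`bubble ${i} exceeds ${MAX_BUBBLE_CHARS} characters and will be truncated`);
    } else if (text.length > RECOMMENDED_BUBBLE_CHARS) {
      problems.push(`bubble ${i} is longer than the recommended ${RECOMMENDED_BUBBLE_CHARS} characters`);
    }
  });
  return problems;
}

console.log(checkBubbles(['First bubble.', 'Second bubble.', 'Third bubble.']));
```

Checking on your side does not replace the platform's own enforcement; it only surfaces problems earlier, while you are writing responses.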

Sample code

Node.js (Dialogflow)

```javascript
const {dialogflow, SimpleResponse} = require('actions-on-google');

const app = dialogflow();

app.intent('Simple Response', (conv) => {
  conv.ask(new SimpleResponse({
    speech: `Here's an example of a simple response. ` +
      `Which type of response would you like to see next?`,
    text: `Here's a simple response. ` +
      `Which response would you like to see next?`,
  }));
});
```

Java (Dialogflow)

```java
// Method of a class that extends DialogflowApp
@ForIntent("Simple Response")
public ActionResponse welcome(ActionRequest request) {
  ResponseBuilder responseBuilder = getResponseBuilder(request);
  responseBuilder.add(
      new SimpleResponse()
          .setTextToSpeech(
              "Here's an example of a simple response. "
                  + "Which type of response would you like to see next?")
          .setDisplayText(
              "Here's a simple response. Which response would you like to see next?"));
  return responseBuilder.build();
}
```

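Node.js (Actions SDK)

```javascript
const {SimpleResponse} = require('actions-on-google');

// Inside an intent handler; `conv` is the conversation object
conv.ask(new SimpleResponse({
  speech: `Here's an example of a simple response. ` +
    `Which type of response would you like to see next?`,
  text: `Here's a simple response. ` +
    `Which response would you like to see next?`,
}));
```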

Java (Actions SDK)

```java
// Inside an intent handler that receives `request`
ResponseBuilder responseBuilder = getResponseBuilder(request);
responseBuilder.add(
    new SimpleResponse()
        .setTextToSpeech(
            "Here's an example of a simple response. "
                + "Which type of response would you like to see next?")
        .setDisplayText(
            "Here's a simple response. Which response would you like to see next?"));
return responseBuilder.build();
```

JSON (Dialogflow)

The JSON below describes a Dialogflow webhook response.

```json
{
  "payload": {
    "google": {
      "expectUserResponse": true,
      "richResponse": {
        "items": [
          {
            "simpleResponse": {
              "textToSpeech": "Here's an example of a simple response. Which type of response would you like to see next?",
              "displayText": "Here's a simple response. Which response would you like to see next?"
            }
          }
        ]
      }
    }
  }
}
```

JSON (Actions SDK)

The JSON below describes an Actions SDK conversation webhook response.

```json
{
  "expectUserResponse": true,
  "expectedInputs": [
    {
      "possibleIntents": [
        {
          "intent": "actions.intent.TEXT"
        }
      ],
      "inputPrompt": {
        "richInitialPrompt": {
          "items": [
            {
              "simpleResponse": {
                "textToSpeech": "Here's an example of a simple response. Which type of response would you like to see next?",
                "displayText": "Here's a simple response. Which response would you like to see next?"
              }
            }
          ]
        }
      }
    }
  ]
}
```
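The two JSON webhook responses differ mainly in where the simple-response items sit: the Dialogflow form nests them under `payload.google.richResponse`, while the Actions SDK form nests them under `expectedInputs[].inputPrompt.richInitialPrompt`. As a minimal sketch, the hypothetical helpers below build both shapes from the same items, copying field names from those samples. They do not cover other fields a real webhook response may need.

```javascript
// Hypothetical helper: one simple-response item. displayText is optional.
function simpleResponseItem(textToSpeech, displayText) {
  const simpleResponse = { textToSpeech };
  if (displayText !== undefined) simpleResponse.displayText = displayText;
  return { simpleResponse };
}

// Dialogflow webhook shape: items live under payload.google.richResponse.
function dialogflowWebhookResponse(items) {
  return {
    payload: {
      google: {
        expectUserResponse: true,
        richResponse: { items },
      },
    },
  };
}

// Actions SDK webhook shape: items live under
// expectedInputs[].inputPrompt.richInitialPrompt.
function actionsSdkWebhookResponse(items) {
  return {
    expectUserResponse: true,
    expectedInputs: [
      {
        possibleIntents: [{ intent: 'actions.intent.TEXT' }],
        inputPrompt: { richInitialPrompt: { items } },
      },
    ],
  };
}

const items = [
  simpleResponseItem(
    "Here's an example of a simple response. Which type of response would you like to see next?",
    "Here's a simple response. Which response would you like to see next?"
  ),
];
console.log(JSON.stringify(dialogflowWebhookResponse(items), null, 2));
console.log(JSON.stringify(actionsSdkWebhookResponse(items), null, 2));
```

Keeping the item construction separate from the envelope makes it easy to reuse the same response content for either webhook format.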

SSML and sounds

Using SSML and sounds in your responses gives them more polish and enhances the user experience. The following code snippets show you how to create a response that uses SSML:

Node.js (Dialogflow)

```javascript
const {dialogflow} = require('actions-on-google');

const app = dialogflow();

app.intent('SSML', (conv) => {
  conv.ask(`<speak>` +
    `Here are <say-as interpret-as="characters">SSML</say-as> examples. ` +
    `Here is a buzzing fly ` +
    `<audio src="https://actions.google.com/sounds/v1/animals/buzzing_fly.ogg"></audio> ` +
    `and here's a short pause <break time="800ms"/>` +
    `</speak>`);
  conv.ask('Which response would you like to see next?');
});
```

Java (Dialogflow)

```java
// Method of a class that extends DialogflowApp
@ForIntent("SSML")
public ActionResponse ssml(ActionRequest request) {
  ResponseBuilder responseBuilder = getResponseBuilder(request);
  responseBuilder.add(
      "<speak>"
          + "Here are <say-as interpret-as=\"characters\">SSML</say-as> examples. "
          + "Here is a buzzing fly "
          + "<audio src=\"https://actions.google.com/sounds/v1/animals/buzzing_fly.ogg\"></audio> "
          + "and here's a short pause <break time=\"800ms\"/>"
          + "</speak>");
  responseBuilder.add("Which response would you like to see next?");
  return responseBuilder.build();
}
```

Node.js (Actions SDK)

```javascript
// Inside an intent handler; `conv` is the conversation object
conv.ask(`<speak>` +
  `Here are <say-as interpret-as="characters">SSML</say-as> examples. ` +
  `Here is a buzzing fly ` +
  `<audio src="https://actions.google.com/sounds/v1/animals/buzzing_fly.ogg"></audio> ` +
  `and here's a short pause <break time="800ms"/>` +
  `</speak>`);
conv.ask('Which response would you like to see next?');
```

Java (Actions SDK)

```java
// Inside an intent handler that receives `request`
ResponseBuilder responseBuilder = getResponseBuilder(request);
responseBuilder.add(
    "<speak>"
        + "Here are <say-as interpret-as=\"characters\">SSML</say-as> examples. "
        + "Here is a buzzing fly "
        + "<audio src=\"https://actions.google.com/sounds/v1/animals/buzzing_fly.ogg\"></audio> "
        + "and here's a short pause <break time=\"800ms\"/>"
        + "</speak>");
responseBuilder.add("Which response would you like to see next?");
return responseBuilder.build();
```

JSON (Dialogflow)

The JSON below describes a Dialogflow webhook response.

```json
{
  "payload": {
    "google": {
      "expectUserResponse": true,
      "richResponse": {
        "items": [
          {
            "simpleResponse": {
              "textToSpeech": "<speak>Here are <say-as interpret-as=\"characters\">SSML</say-as> examples. Here is a buzzing fly <audio src=\"https://actions.google.com/sounds/v1/animals/buzzing_fly.ogg\"></audio> and here's a short pause <break time=\"800ms\"/></speak>"
            }
          },
          {
            "simpleResponse": {
              "textToSpeech": "Which response would you like to see next?"
            }
          }
        ]
      }
    }
  }
}
```

JSON (Actions SDK)

The JSON below describes an Actions SDK conversation webhook response.

```json
{
  "expectUserResponse": true,
  "expectedInputs": [
    {
      "possibleIntents": [
        {
          "intent": "actions.intent.TEXT"
        }
      ],
      "inputPrompt": {
        "richInitialPrompt": {
          "items": [
            {
              "simpleResponse": {
                "textToSpeech": "<speak>Here are <say-as interpret-as=\"characters\">SSML</say-as> examples. Here is a buzzing fly <audio src=\"https://actions.google.com/sounds/v1/animals/buzzing_fly.ogg\"></audio> and here's a short pause <break time=\"800ms\"/></speak>"
              }
            },
            {
              "simpleResponse": {
                "textToSpeech": "Which response would you like to see next?"
              }
            }
          ]
        }
      }
    }
  ]
}
```

See the SSML reference documentation for more information.
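Because the SSML samples are assembled by string concatenation, it is easy to drop the space between two sentences or to embed a character such as `&` or `<` that makes the markup invalid. A minimal sketch of a safer approach, assuming you build SSML by hand rather than with a library: escape plain text before inserting it, and join the pieces with spaces. The helper names `escapeSsml`, `sayAsCharacters`, `pause`, and `speak` are hypothetical; the `<speak>`, `<say-as>`, and `<break>` elements are the same ones used in the samples on this page.

```javascript
// Escape XML special characters so plain text can't break the SSML markup.
// '&' is replaced first so the other entities are not double-escaped.
function escapeSsml(text) {
  return text
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Hypothetical helpers that return SSML fragments (markup, not plain text).
const sayAsCharacters = (text) =>
  `<say-as interpret-as="characters">${escapeSsml(text)}</say-as>`;
const pause = (ms) => `<break time="${ms}ms"/>`;

// Join fragments with spaces so sentences don't run together, then wrap them
// in <speak>. Fragments are inserted as-is, so escape any plain text first.
function speak(...fragments) {
  return `<speak>${fragments.join(' ')}</speak>`;
}

const ssml = speak(
  `Here are ${sayAsCharacters('SSML')} examples.`,
  escapeSsml("Here's a pause for Q&A"),
  pause(800)
);
console.log(ssml);
```

The resulting string can be passed to `conv.ask()` like the hand-written `<speak>` strings in the samples.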

Sound library

We provide a variety of free, short sounds in our sound library. These sounds are hosted for you, so all you need to do is include them in your SSML.
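Including a hosted sound means adding an `<audio>` element to your SSML, as the buzzing-fly samples do. The sketch below wraps that in a small helper, assuming you also want fallback text: in SSML, content placed inside `<audio>` is rendered if the audio file can't be played. `audioClip` and `escapeXml` are hypothetical names; the URL is the one used in the samples on this page.

```javascript
// Escape characters that are special in XML text and attribute values.
function escapeXml(text) {
  return text
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Hypothetical helper: an <audio> element whose optional inner text serves as
// a fallback if the clip cannot be played.
function audioClip(src, fallbackText = '') {
  return `<audio src="${escapeXml(src)}">${escapeXml(fallbackText)}</audio>`;
}

const FLY_SOUND = 'https://actions.google.com/sounds/v1/animals/buzzing_fly.ogg';
const ssml = `<speak>Here is a buzzing fly ${audioClip(FLY_SOUND, 'a buzzing fly')}</speak>`;
console.log(ssml);
```

Escaping the `src` value matters for URLs that contain query strings, since a raw `&` is not valid inside an XML attribute.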